HOUSTON—I had enough to worry about as Hurricane Harvey plowed into the Texas Gulf Coast on the night of August 25 and delivered a category 4 punch to the nearby city of Rockport. But I simultaneously faced a different kind of storm: an unexpected surge of traffic hitting the Space City Weather Web server. This was the first of what would turn into several very long and restless nights.

Space City Weather is a Houston-area weather blog and forecasting site run by my coworker Eric Berger and his buddy Matt Lanza (along with contributing author Braniff Davis). A few months before Hurricane Harvey decided to crap all over us in Texas, after watching Eric and Matt struggle with Web hosting companies during previous high-traffic weather events, I offered to host SCW on my own private dedicated server (and not the one in my closet—a real server in a real data center). After all, I thought, the box was heavily underutilized with just my own silly stuff. I'd previously had some experience in self-hosting WordPress sites, and my usual hosting strategy ought to do just fine against SCW’s projected traffic. It’d be fun!

But that Friday evening, with Harvey battering Rockport and forecasters predicting doom and gloom for hundreds of miles of Texas coastline, SCW’s 24-hour page view counter zipped past the 800,000 mark and kept on going. The unique visitor number was north of 400,000 and climbing. The server was dishing out between 10 and 20 pages per second. The traffic storm had arrived.

It was the first—but not the last—time over the next several days that I’d stare at the rapidly ticking numbers and wonder if I’d forgotten something or screwed something up. The heavy realization that millions of people (literally) were relying on Eric's and Matt's forecasting to make life-or-death decisions—and that if the site went down it would be my fault—made me feel sick. Was the site prepared? Were my configuration choices—many made months or years earlier for far different requirements—the right ones? Did the server have enough RAM? Would the load be more than it could handle?

Wild blue yonder

On a normal day with calm skies, Space City Weather sees maybe 5,000 visitors who generate maybe 10,000 page views, max. If there’s a bad thunderstorm on the horizon, the site might see twice that many views as folks try to check in and get weather updates.

Hurricane Harvey managed to bring in traffic at about 100x the normal rate for several days, peaking at 1.1 million views on Sunday, August 27 (that's not a cumulative number—that's just for that one day). Every day between August 24 and August 29, the site had at least 300,000 views, and many had far more. Between Eric’s first Harvey-related post on August 22 and the time the whole mess was finally over a week later, Space City Weather had served 4.3 million pages to 2.1 million uniques—and the only downtime was when I called the hosting company on August 25 to have the server re-cabled to a gigabit switch port so we’d have more burst capacity.
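To put those daily totals in server terms, here is a quick back-of-the-envelope conversion from views per day to average pages per second. The two daily figures come from the numbers above; the function name and the even-spread assumption are mine, and real traffic is burstier than a flat average suggests.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def pages_per_second(daily_views: int) -> float:
    """Average page rate, assuming views are spread evenly across the day."""
    return daily_views / SECONDS_PER_DAY

normal_day = 10_000     # typical calm-weather page views per day
peak_day = 1_100_000    # Sunday, August 27

normal_rate = pages_per_second(normal_day)
peak_rate = pages_per_second(peak_day)
multiplier = peak_day / normal_day

print(f"normal: {normal_rate:.2f} pages/s")         # normal: 0.12 pages/s
print(f"peak:   {peak_rate:.2f} pages/s")           # peak:   12.73 pages/s
print(f"peak is {multiplier:.0f}x a normal day")    # peak is 110x a normal day
```

A flat average of roughly 13 pages per second on the peak day is a floor, not a ceiling, since visitors tend to arrive in clumps when a new forecast goes up.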

Some of the site’s staying power is due to simplicity: SCW is a vanilla self-hosted WordPress site without much customization. There’s nothing terribly special about the configuration. The only custom code is two small snippets: a few lines of PHP I copied off of the WordPress Codex site to display the site’s logo on the WordPress login screen, and another bit that strips query strings from some static resources to help the cache hit rate. The site runs an off-the-shelf theme. Including the two bits of custom code, the site uses a total of eight plug-ins, rather than the dozens that many WP sites saddle themselves with.
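The query-string trick is worth a quick illustration. WordPress tends to tack a version parameter such as `?ver=4.8.1` onto stylesheet and script URLs, and a cache that keys on the full URL can end up storing the same file under several names. The sketch below is not the PHP running on SCW; it is a minimal Python rendering of the idea, and the extension list and function name are assumptions chosen for the example.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption: which file extensions count as "static" is site-specific.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".gif", ".svg", ".woff2")

def strip_static_query(url: str) -> str:
    """Drop the query string from static asset URLs; leave other URLs alone."""
    parts = urlsplit(url)
    if parts.path.lower().endswith(STATIC_EXTENSIONS):
        # Rebuild the URL with an empty query component.
        return urlunsplit((parts.scheme, parts.netloc, parts.path, "", parts.fragment))
    return url

print(strip_static_query("https://example.com/wp-includes/css/style.css?ver=4.8.1"))
# https://example.com/wp-includes/css/style.css
print(strip_static_query("https://example.com/?s=harvey"))
# https://example.com/?s=harvey
```

Search results and other dynamic pages keep their query strings, since there the parameters actually change what comes back.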

I’d like to be an immodest rock star sysadmin and claim that I customized my hosting specifically around some prescient guess at SCW's worst-case traffic nightmare scenario, but that would be a lie. A lot of what kept SCW up was a basic philosophy of “cache the crap out of everything possible in case traffic arrives unexpectedly.” The SCW team usually posts no more than twice a day, and the site uses WordPress’ integrated comment system. The site is therefore well suited to take advantage of as many levels of caching as possible, and my setup tries to cram cache in everywhere.
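Why does a rarely updated site cache so well? A toy model helps. The sketch below is a deliberately simplified time-to-live page cache, not how Varnish or any real cache layer works internally. The class name, the 300-second TTL, and the `slow_render` stand-in for WordPress are all hypothetical.

```python
import time
from typing import Callable, Dict, Tuple

class TTLPageCache:
    """Serve a stored page until it is older than ttl_seconds, then re-render."""

    def __init__(self, render: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._render = render
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # path -> (stored_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1          # fresh copy: the backend is never touched
            return entry[1]
        self.misses += 1            # missing or stale: do the expensive work once
        body = self._render(path)
        self._store[path] = (now, body)
        return body

def slow_render(path: str) -> str:
    # Hypothetical stand-in for the PHP and database work behind a page.
    return f"<html>page for {path}</html>"

cache = TTLPageCache(slow_render, ttl_seconds=300)
for _ in range(1000):
    cache.get("/")
print(cache.hits, cache.misses)  # 999 1
```

A thousand requests for the front page cost one render. When the content changes only a couple of times a day, nearly every visitor can be handed a copy that is already sitting in memory.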

Any sysadmin who has spent a bit of time deploying Web apps in a non-simple environment can tell you that configuring cache properly can take years off your life; you can burn a ridiculous amount of time tracking down edge cases and handling the exclusions. Cache at multiple levels in your stack makes for annoyances and extra configuration changes when setting up new sites, and it can greatly complicate deployment troubleshooting.

But in this case, it’s what saved the site from drowning in the traffic tidal wave.

How to have a hurricane (server) party

From a hosting perspective, engineering a site to handle SCW’s normal daily traffic load is easy: you could do it with a DigitalOcean droplet or a free AWS micro instance. But building in capacity to serve a million views a day requires a different vision. You can’t just throw that much traffic at an AWS micro and have it live.

Fortunately, when I offered to take over SCW’s hosting in July, I didn’t have to worry very much about the base requirements. A few years ago, I wrote a series for Ars called Web Served, which focused on walking readers through some basic (and some not-so-basic) Web server setup tasks. The guide is sorely out of date, and I’m planning on updating it. So to facilitate that update and give me a big sandbox to play in, late in 2016 I acquired a dedicated server at Liquid Web in Michigan and migrated my little closet data center onto it.

The box was happy serving my personal domain and a few other things (namely the Chronicles of George and Fangs, an Elite Dangerous webcomic), but it was grossly underutilized and essentially sitting idle. Except for the occasional Reddit hug when the Chronicles of George got mentioned in the Tales from Tech Support subreddit, traffic was generally no more than a few hundred page views per day across all sites.

Still, as someone who suffers from an irresistible urge to tinker, I’d spent the past several years screwing around with and blogging about adventures in Web hosting. In order to satisfy my own curiosity on how stuff works, I’d managed to cobble together a reasonably Reddit-proof Web stack—again, not through requirements-based planning, but just through the urge to have neat stuff to play with. Through luck or chance, this stack ended up being just the thing to soak up hurricane traffic.

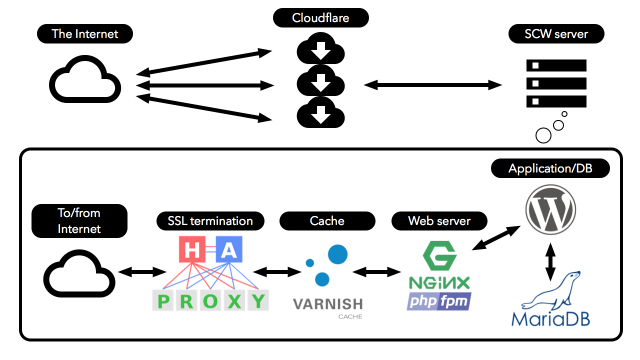

By the time Hurricane Harvey started making life difficult, here’s what Space City Weather was running on, sharing server space with my other sites:

Every layer here played a role in keeping the site up. If you’d rather just have the “tl;dr” version of how to sustain a lot of traffic on a single server, here it is:

- Dedicated hardware server—no shared hosting

- Gigabit uplink to handle burst traffic

- Varnish cache with lots of RAM

- Plugin-light WordPress installation

- Off-box stats via WordPress/Jetpack

- Cloudflare as a CDN

- No heavy dependencies (e.g., ad networks)

Now, let’s get to the details.
